Risk identification is a fundamental phase of a risk assessment. It begins after the system and objectives are defined. Personal experience, rationality and the concept of a credible scenario are important in the context of risk identification. Often, we hear that practitioners consider only credible scenarios in a risk identification exercise.

Today we explore why only considering credible scenarios is a mistake and could lead to disaster.

Credible and Incredible Scenarios

We believe that considering only credible scenarios is censorship. Risk analysis should prioritize the scenarios, but no scenario should be discounted at first. The prioritization resulting from the risk assessment will take care of eliminating far-fetched or meaningless scenarios. That is a result of the analysis, not an arbitrary decision made prior to analysis.

It has been said that it takes 10,000 hours of practice to reach expert status in a subject matter, an idea Malcolm Gladwell discusses in his bestseller Outliers. When an expert says that a failure can’t happen, that a failure mode is beyond belief, it means that it was unheard of during the expert’s 10,000 hours of observation or practice. Those 10,000 hours are not necessarily real hands-on experience, and not necessarily spent in the system’s real environment. We all know that detailed environmental conditions can considerably change, for example, the likelihood of failure.

As a side note, if one takes ten experts and puts them together, the sum of their experience will not considerably alter the final result: they may very well all agree, erroneously, that a failure is far-fetched, because they made their observations over the same period.

Tailings Dam Failures

Note that, in the context of tailings dams, those 10,000 hours are negligible compared to the record of every incident across the world's portfolio of dams. In fact, 10,000 hours is slightly more than the running time of just one operational dam for one year (8,760 hours), which in turn is negligible compared to the roughly 3×10^7 collective operating hours accumulated each year by 3,500 dams. Of those 3,500 dams, there are perhaps 3−4 major failures per year.
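The orders of magnitude quoted above can be checked with a few lines of arithmetic. The sketch below uses only the figures from the text (10,000 expert hours, 3,500 dams, 3 to 4 major failures per year); the variable names are ours, and it assumes every dam operates around the clock for a 365-day year, which is a simplification.

```python
# Back-of-the-envelope check of the figures quoted in the text.
# Assumption: each dam operates 24 hours a day, 365 days a year.
HOURS_PER_YEAR = 24 * 365          # 8,760 hours
EXPERT_HOURS = 10_000              # hours of practice attributed to an expert
DAMS = 3_500                       # size of the dam portfolio quoted in the text
FAILURES_LOW, FAILURES_HIGH = 3, 4 # major failures per year quoted in the text

# Collective operating hours accumulated by the portfolio in one year.
dam_hours_per_year = DAMS * HOURS_PER_YEAR

# Share of one year of collective experience covered by one expert's hours.
expert_share = EXPERT_HOURS / dam_hours_per_year

# Crude annual failure frequency per dam, treating all dams as identical.
rate_low = FAILURES_LOW / DAMS
rate_high = FAILURES_HIGH / DAMS

print(f"Collective dam-hours per year: {dam_hours_per_year:,}")
print(f"Expert hours as share of one year: {expert_share:.2e}")
print(f"Major failures per dam-year: {rate_low:.1e} to {rate_high:.1e}")
```

On these assumptions, an expert's 10,000 hours cover only a few ten-thousandths of a single year of collective operation, while the quoted failure count corresponds to roughly one major failure per thousand dam-years. Treating all dams as identical is, of course, a crude simplification made only for this check.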

3−4 major Tailings Dam Failures per Year

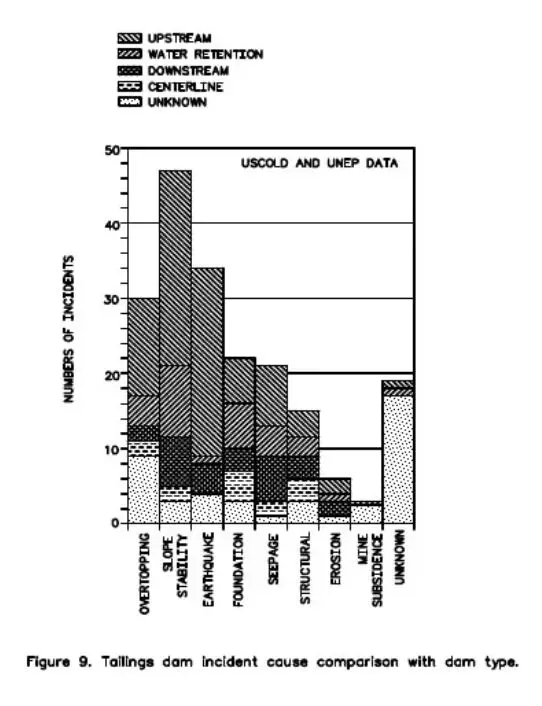

Those 3−4 major failures per year have been painstakingly collected and analyzed over the last hundred years. Publications like ICOLD (2001) (see figure below) attempted to define failure modes for dams of different types. The large number of failures attributed to unknown causes highlights the uncertainties embedded in the definition of the failure modes. Furthermore, consider for example the slope stability category. How do we know that those slope stability accidents weren’t caused by erosion, seepage, foundation problems or perhaps a small earthquake?